# Custom configurations

Embedchain offers several configuration options for your LLM, vector database, and embedding model. All of these configuration options are optional and have sane defaults.

You can configure the different components of your app (`llm`, `embedding model`, or `vector database`) through a simple YAML configuration that Embedchain offers.

Embedchain applications can be configured using a YAML file, a JSON file, or by directly passing a config dictionary. Check out the [docs here](/api-reference/app/overview#usage) to learn how to use the other formats.

Here is a generic full-stack example of the config in each format:

```yaml config.yaml
app:
  config:
    name: 'full-stack-app'

llm:
  provider: openai
  config:
    model: 'gpt-4o-mini'
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false
    api_key: sk-xxx
    model_kwargs:
      response_format:
        type: json_object
    # quoted so that YAML parsers read it as a string, not a date
    api_version: '2024-02-01'
    http_client_proxies: http://testproxy.mem0.net:8000
    prompt: |
      Use the following pieces of context to answer the query at the end.
      If you don't know the answer, just say that you don't know, don't try to make up an answer.
      $context

      Query: $query

      Helpful Answer:
    system_prompt: |
      Act as William Shakespeare. Answer the following questions in the style of William Shakespeare.

vectordb:
  provider: chroma
  config:
    collection_name: 'full-stack-app'
    dir: db
    allow_reset: true

embedder:
  provider: openai
  config:
    model: 'text-embedding-ada-002'
    api_key: sk-xxx
    http_client_proxies: http://testproxy.mem0.net:8000

chunker:
  chunk_size: 2000
  chunk_overlap: 100
  length_function: 'len'
  min_chunk_size: 0

cache:
  similarity_evaluation:
    strategy: distance
    max_distance: 1.0
  config:
    similarity_threshold: 0.8
    auto_flush: 50
```

```json config.json
{
  "app": {
    "config": {
      "name": "full-stack-app"
    }
  },
  "llm": {
    "provider": "openai",
    "config": {
      "model": "gpt-4o-mini",
      "temperature": 0.5,
      "max_tokens": 1000,
      "top_p": 1,
      "stream": false,
      "prompt": "Use the following pieces of context to answer the query at the end.\nIf you don't know the answer, just say that you don't know, don't try to make up an answer.\n$context\n\nQuery: $query\n\nHelpful Answer:",
      "system_prompt": "Act as William Shakespeare. Answer the following questions in the style of William Shakespeare.",
      "api_key": "sk-xxx",
      "model_kwargs": {"response_format": {"type": "json_object"}},
      "api_version": "2024-02-01",
      "http_client_proxies": "http://testproxy.mem0.net:8000"
    }
  },
  "vectordb": {
    "provider": "chroma",
    "config": {
      "collection_name": "full-stack-app",
      "dir": "db",
      "allow_reset": true
    }
  },
  "embedder": {
    "provider": "openai",
    "config": {
      "model": "text-embedding-ada-002",
      "api_key": "sk-xxx",
      "http_client_proxies": "http://testproxy.mem0.net:8000"
    }
  },
  "chunker": {
    "chunk_size": 2000,
    "chunk_overlap": 100,
    "length_function": "len",
    "min_chunk_size": 0
  },
  "cache": {
    "similarity_evaluation": {
      "strategy": "distance",
      "max_distance": 1.0
    },
    "config": {
      "similarity_threshold": 0.8,
      "auto_flush": 50
    }
  }
}
```

```python config.py
config = {
    'app': {
        'config': {
            'name': 'full-stack-app'
        }
    },
    'llm': {
        'provider': 'openai',
        'config': {
            'model': 'gpt-4o-mini',
            'temperature': 0.5,
            'max_tokens': 1000,
            'top_p': 1,
            'stream': False,
            'prompt': (
                "Use the following pieces of context to answer the query at the end.\n"
                "If you don't know the answer, just say that you don't know, don't try to make up an answer.\n"
                "$context\n\nQuery: $query\n\nHelpful Answer:"
            ),
            'system_prompt': (
                "Act as William Shakespeare. Answer the following questions in the style of William Shakespeare."
            ),
            'api_key': 'sk-xxx',
            "model_kwargs": {"response_format": {"type": "json_object"}},
            "http_client_proxies": "http://testproxy.mem0.net:8000",
        }
    },
    'vectordb': {
        'provider': 'chroma',
        'config': {
            'collection_name': 'full-stack-app',
            'dir': 'db',
            'allow_reset': True
        }
    },
    'embedder': {
        'provider': 'openai',
        'config': {
            'model': 'text-embedding-ada-002',
            'api_key': 'sk-xxx',
            "http_client_proxies": "http://testproxy.mem0.net:8000",
        }
    },
    'chunker': {
        'chunk_size': 2000,
        'chunk_overlap': 100,
        'length_function': 'len',
        'min_chunk_size': 0
    },
    'cache': {
        'similarity_evaluation': {
            'strategy': 'distance',
            'max_distance': 1.0,
        },
        'config': {
            'similarity_threshold': 0.8,
            'auto_flush': 50,
        },
    },
}
```

Alright, let's dive into what each key means in the yaml config above:

1. `app` Section:
   * `config`:
     * `name` (String): The name of your full-stack application.
     * `id` (String): The id of your full-stack application. Only use this to reload already created apps. We recommend that users do not create their own ids.
     * `collect_metrics` (Boolean): Indicates whether metrics should be collected for the app. Defaults to `True`.
     * `log_level` (String): The log level for the app. Defaults to `WARNING`.

2. `llm` Section:
   * `provider` (String): The provider for the language model, set to 'openai' in this example. You can find the full list of llm providers in [our docs](/components/llms).
   * `config`:
     * `model` (String): The specific model being used, 'gpt-4o-mini'.
     * `temperature` (Float): Controls the randomness of the model's output. A higher value (closer to 1) makes the output more random.
     * `max_tokens` (Integer): Controls the maximum number of tokens used in the response.
     * `top_p` (Float): Controls the diversity of word selection. A higher value (closer to 1) makes word selection more diverse.
     * `stream` (Boolean): Controls whether the response is streamed back to the user (set to false).
     * `online` (Boolean): Controls whether to use the internet to get more context for answering the query (set to false).
     * `token_usage` (Boolean): Controls whether token usage is tracked for queries to the model (set to false).
     * `prompt` (String): A prompt for the model to follow when generating responses. It requires the `$context` and `$query` variables.
     * `system_prompt` (String): A system prompt for the model to follow when generating responses. In this case, it is set to the style of William Shakespeare.
     * `number_documents` (Integer): The number of documents to pull from the vectordb as context. Defaults to 1.
     * `api_key` (String): The API key for the language model.
     * `model_kwargs` (Dict): Keyword arguments to pass to the language model. Used for the `aws_bedrock` provider, since it requires different arguments for each model.
     * `http_client_proxies` (Dict | String): The proxy server settings used to create `self.http_client` using `httpx.Client(proxies=http_client_proxies)`.
     * `http_async_client_proxies` (Dict | String): The proxy server settings for async calls, used to create `self.http_async_client` using `httpx.AsyncClient(proxies=http_async_client_proxies)`.

3. `vectordb` Section:
   * `provider` (String): The provider for the vector database, set to 'chroma'. You can find the full list of vector database providers in [our docs](/components/vector-databases).
   * `config`:
     * `collection_name` (String): The initial collection name for the vectordb, set to 'full-stack-app'.
     * `dir` (String): The directory for the local database, set to 'db'.
     * `allow_reset` (Boolean): Indicates whether resetting the vectordb is allowed, set to true.
     * `batch_size` (Integer): The batch size for inserting docs into the vectordb. Defaults to `100`.

   We recommend checking out the vectordb-specific config [here](https://docs.embedchain.ai/components/vector-databases).

4. `embedder` Section:
   * `provider` (String): The provider for the embedder, set to 'openai'. You can find the full list of embedding model providers in [our docs](/components/embedding-models).
   * `config`:
     * `model` (String): The specific model used for text embedding, 'text-embedding-ada-002'.
     * `vector_dimension` (Integer): The vector dimension of the embedding model. [Defaults](https://github.com/embedchain/embedchain/blob/main/embedchain/models/vector_dimensions.py)
     * `api_key` (String): The API key for the embedding model.
     * `endpoint` (String): The endpoint for the HuggingFace embedding model.
     * `deployment_name` (String): The deployment name for the embedding model.
     * `title` (String): The title for the embedding model for Google Embedder.
     * `task_type` (String): The task type for the embedding model for Google Embedder.
     * `model_kwargs` (Dict): Used to pass extra arguments to embedders.
     * `http_client_proxies` (Dict | String): The proxy server settings used to create `self.http_client` using `httpx.Client(proxies=http_client_proxies)`.
     * `http_async_client_proxies` (Dict | String): The proxy server settings for async calls, used to create `self.http_async_client` using `httpx.AsyncClient(proxies=http_async_client_proxies)`.

5. `chunker` Section:
   * `chunk_size` (Integer): The size of each chunk of text that is sent to the language model.
   * `chunk_overlap` (Integer): The amount of overlap between each chunk of text.
   * `length_function` (String): The function used to calculate the length of each chunk of text. In this case, it is set to 'len'. You can also pass the import path of any function as a string here.
   * `min_chunk_size` (Integer): The minimum size of each chunk of text that is sent to the language model. Must be less than `chunk_size`, and greater than `chunk_overlap`.

6. `cache` Section: (Optional)
   * `similarity_evaluation` (Optional): The config for the similarity evaluation strategy. If not provided, the default `distance` based similarity evaluation strategy is used.
     * `strategy` (String): The strategy to use for similarity evaluation. Currently, only the `distance` and `exact` strategies are supported. Defaults to `distance`.
     * `max_distance` (Float): The upper bound on the distance. Defaults to `1.0`.
     * `positive` (Boolean): Set to `True` if a larger distance indicates that two entities are more similar, otherwise `False`. Defaults to `False`.
   * `config` (Optional): The config for initializing the cache. If not provided, the sensible default values mentioned below are used.
     * `similarity_threshold` (Float): The threshold for similarity evaluation. Defaults to `0.8`.
     * `auto_flush` (Integer): The number of queries after which the cache is flushed. Defaults to `20`.

7. `memory` Section: (Optional)
   * `top_k` (Integer): The number of top-k results to return. Defaults to `10`.
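The `prompt` key in the `llm` section requires the `$context` and `$query` variables. As a minimal sketch, the snippet below uses Python's standard `string.Template` to check that both placeholders are present and to fill them in. Whether Embedchain uses the same mechanism internally is an assumption, and `missing_placeholders` is a hypothetical helper, not part of the library.

```python
from string import Template

PROMPT = (
    "Use the following pieces of context to answer the query at the end.\n"
    "If you don't know the answer, just say that you don't know, "
    "don't try to make up an answer.\n"
    "$context\n\nQuery: $query\n\nHelpful Answer:"
)

REQUIRED = ("context", "query")


def missing_placeholders(prompt):
    """Return the required placeholder names that do not appear in the prompt."""
    found = set()
    # Template.pattern is the compiled regex that string.Template uses to find
    # $name and ${name} placeholders.
    for match in Template.pattern.finditer(prompt):
        name = match.group("named") or match.group("braced")
        if name:
            found.add(name)
    return [name for name in REQUIRED if name not in found]


# Fill the placeholders the way a retrieval step might (illustrative values).
filled = Template(PROMPT).substitute(
    context="Elon Musk is the CEO of Tesla.",
    query="Who runs Tesla?",
)
print(missing_placeholders(PROMPT))
print(filled)
```

Running a check like this before loading a config can catch a prompt that silently drops the retrieved context.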

If you provide a cache section, the app will automatically configure and use a cache to store the results of the language model. This is useful if you want to speed up the response time and save inference cost of your app.
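To illustrate how `max_distance`, `positive`, and `similarity_threshold` could interact, here is a small sketch of a distance-based cache decision. The formula (clamp the distance, invert it unless `positive` is set, then compare against the threshold scaled by `max_distance`) is an assumption made for illustration; the caching backend may compute its score differently. Both function names are hypothetical.

```python
def similarity_score(distance, max_distance=1.0, positive=False):
    """Clamp the distance to [0, max_distance] and turn it into a similarity score."""
    clamped = min(max(distance, 0.0), max_distance)
    # When larger distances mean "more similar", the clamped value is the score.
    # Otherwise a small distance should produce a high score.
    return clamped if positive else max_distance - clamped


def is_cache_hit(distance, similarity_threshold=0.8, max_distance=1.0, positive=False):
    """Treat a cached answer as reusable when its score reaches the threshold."""
    score = similarity_score(distance, max_distance, positive)
    return score >= similarity_threshold * max_distance


print(is_cache_hit(0.1))  # close match
print(is_cache_hit(0.5))  # too far away for a threshold of 0.8
```

Under these assumptions, raising `similarity_threshold` makes the cache stricter, so fewer queries are answered from it.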

If you have questions about the configuration above, please feel free to reach out to us.

# 📊 add

The `add()` method loads data from different data sources into a RAG pipeline. You can find the signature below:

### Parameters

* `source`: The data to embed. It can be a URL, a local file, or raw content, depending on the data type. You can find the full list of supported data sources [here](/components/data-sources/overview).

* `data_type`: The type of the data source. It can be detected automatically, but you can force a specific data type to load as.

* `metadata`: Any metadata that you want to store with the data source. Metadata is useful for filtering on top of semantic search, which yields faster searches and better results.

* `all_references`: Instructs Embedchain to retrieve all the context and information from the specified link, as well as from any reference links on the page.
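To make the idea of metadata filtering concrete, the sketch below filters a handful of in-memory chunks by exact key-value matches, the way a `where`-style filter narrows results before or after semantic search. The chunk structure and the `filter_chunks` helper are hypothetical and only illustrate the concept; the vector database performs the real filtering.

```python
# Hypothetical chunks, each stored with the metadata passed at add time.
chunks = [
    {"text": "Bill Gates net worth ...", "metadata": {"type": "forbes", "person": "gates"}},
    {"text": "Bill Gates biography ...", "metadata": {"type": "wiki", "person": "gates"}},
    {"text": "Elon Musk profile ...", "metadata": {"type": "forbes", "person": "musk"}},
]


def filter_chunks(chunks, where):
    """Keep only the chunks whose metadata matches every key-value pair in `where`."""
    return [
        chunk
        for chunk in chunks
        if all(chunk["metadata"].get(key) == value for key, value in where.items())
    ]


for chunk in filter_chunks(chunks, {"person": "gates", "type": "wiki"}):
    print(chunk["text"])
```

Because the filter discards non-matching chunks up front, the semantic search only has to rank a smaller candidate set.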

## Usage

### Load data from webpage

```python Code example

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

# Inserting batches in chromadb: 100%|███████████████| 1/1 [00:00<00:00, 1.19it/s]

# Successfully saved https://www.forbes.com/profile/elon-musk (DataType.WEB_PAGE). New chunks count: 4

```

### Load data from sitemap

```python Code example

from embedchain import App

app = App()

app.add("https://python.langchain.com/sitemap.xml", data_type="sitemap")

# Loading pages: 100%|█████████████| 1108/1108 [00:47<00:00, 23.17it/s]

# Inserting batches in chromadb: 100%|█████████| 111/111 [04:41<00:00, 2.54s/it]

# Successfully saved https://python.langchain.com/sitemap.xml (DataType.SITEMAP). New chunks count: 11024

```

You can find the complete list of supported data sources [here](/components/data-sources/overview).

# 💬 chat

`chat()` method allows you to chat over your data sources using a user-friendly chat API. You can find the signature below:

### Parameters

* `input_query`: The question to ask.

* `config`: Configures different llm settings such as prompt, temperature, number\_documents, etc.

* `dry_run`: Tests the prompt structure without actually running LLM inference. Defaults to `False`

* `where`: A dictionary of key-value pairs to filter the chunks from the vector database. Defaults to `None`

* `session_id`: Session ID of the chat. This can be used to maintain the chat history of different user sessions. Default value: `default`

* `citations`: Returns citations along with the LLM answer. Defaults to `False`

### Returns

If `citations=False`, returns a stringified answer to the question asked.

If `citations=True`, returns a tuple with answer and citations respectively.

## Usage

### With citations

If you want both the answer to your question and its citations, use the following code snippet:

```python With Citations

from embedchain import App

# Initialize app

app = App()

# Add data source

app.add("https://www.forbes.com/profile/elon-musk")

# Get relevant answer for your query

answer, sources = app.chat("What is the net worth of Elon?", citations=True)

print(answer)

# Answer: The net worth of Elon Musk is $221.9 billion.

print(sources)

# [

# (

# 'Elon Musk PROFILEElon MuskCEO, Tesla$247.1B$2.3B (0.96%)Real Time Net Worthas of 12/7/23 ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.89,

# ...

# }

# ),

# (

# '74% of the company, which is now called X.Wealth HistoryHOVER TO REVEAL NET WORTH BY YEARForbes ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.81,

# ...

# }

# ),

# (

# 'founded in 2002, is worth nearly $150 billion after a $750 million tender offer in June 2023 ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.73,

# ...

# }

# )

# ]

```

When `citations=True`, note that the returned `sources` are a list of tuples where each tuple has two elements (in the following order):

1. source chunk

2. dictionary with metadata about the source chunk

   * `url`: url of the source

   * `doc_id`: document id (used for bookkeeping purposes)

   * `score`: score of the source chunk with respect to the question

   * other metadata you might have added at the time of adding the source
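As a small illustration of working with this structure, the sketch below unpacks each `(chunk, metadata)` tuple and keeps the sources above a score cutoff. The sample data is abbreviated from the output above, and `top_sources` with its `min_score` parameter is a hypothetical helper rather than part of Embedchain.

```python
# Abbreviated sample in the same shape as the `sources` value returned with citations.
sources = [
    ("Elon Musk PROFILE ...", {"url": "https://www.forbes.com/profile/elon-musk", "score": 0.89}),
    ("74% of the company ...", {"url": "https://www.forbes.com/profile/elon-musk", "score": 0.81}),
    ("founded in 2002 ...", {"url": "https://www.forbes.com/profile/elon-musk", "score": 0.73}),
]


def top_sources(sources, min_score=0.8):
    """Return (url, score, chunk) for every source whose score reaches min_score."""
    return [
        (metadata["url"], metadata["score"], chunk)
        for chunk, metadata in sources
        if metadata.get("score", 0.0) >= min_score
    ]


for url, score, chunk in top_sources(sources):
    print(f"{score:.2f}  {url}  {chunk[:30]}")
```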

### Without citations

If you just want to return answers and don't want to return citations, you can use the following example:

```python Without Citations

from embedchain import App

# Initialize app

app = App()

# Add data source

app.add("https://www.forbes.com/profile/elon-musk")

# Chat on your data using `.chat()`

answer = app.chat("What is the net worth of Elon?")

print(answer)

# Answer: The net worth of Elon Musk is $221.9 billion.

```

### With session id

If you want to maintain chat sessions for different users, you can simply pass the `session_id` keyword argument. See the example below:

```python With session id

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

# Chat on your data using `.chat()`

app.chat("What is the net worth of Elon Musk?", session_id="user1")

# 'The net worth of Elon Musk is $250.8 billion.'

app.chat("What is the net worth of Bill Gates?", session_id="user2")

# "I don't know the current net worth of Bill Gates."

app.chat("What was my last question", session_id="user1")

# 'Your last question was "What is the net worth of Elon Musk?"'

```

### With custom context window

If you want to customize the context window used during chat (the default context window is 3 document chunks), you can do so using the following code snippet:

```python with custom chunks size

from embedchain import App

from embedchain.config import BaseLlmConfig

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

query_config = BaseLlmConfig(number_documents=5)

app.chat("What is the net worth of Elon Musk?", config=query_config)

```

### With Mem0 to store chat history

Mem0 is a cutting-edge long-term memory for LLMs to enable personalization for the GenAI stack. It enables LLMs to remember past interactions and provide more personalized responses.

In order to use Mem0 to enable memory for personalization in your apps:

* Install the [`mem0`](https://docs.mem0.ai/) package using `pip install mem0ai`.

* Prepare the config for `memory`; refer to [Configurations](docs/api-reference/advanced/configuration.mdx).

```python with mem0

from embedchain import App

config = {

"memory": {

"top_k": 5

}

}

app = App.from_config(config=config)

app.add("https://www.forbes.com/profile/elon-musk")

app.chat("What is the net worth of Elon Musk?")

```

## How Mem0 works:

* Mem0 saves context derived from each user question into its memory.

* When a user poses a new question, Mem0 retrieves relevant previous memories.

* The `top_k` parameter in the memory configuration specifies the number of top memories to consider during retrieval.

* Mem0 generates the final response by integrating the user's question, context from the data source, and the relevant memories.
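The retrieval step described above can be sketched with a toy example. The snippet ranks stored memories by how many words they share with the new question and keeps the `top_k` best ones. The word-overlap score is purely an assumption for illustration (Mem0 has its own retrieval logic), and `retrieve_memories` is a hypothetical function.

```python
def retrieve_memories(question, memories, top_k=5):
    """Return up to top_k memories that share the most words with the question."""
    question_words = set(question.lower().split())
    scored = []
    for memory in memories:
        overlap = len(question_words & set(memory.lower().split()))
        if overlap > 0:  # ignore memories with nothing in common
            scored.append((overlap, memory))
    # Highest overlap first; sort() is stable, so ties keep insertion order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [memory for _, memory in scored[:top_k]]


memories = [
    "user asked about elon musk net worth",
    "user likes short answers",
    "elon musk owns tesla",
]
print(retrieve_memories("net worth of elon musk", memories, top_k=2))
```

A smaller `top_k` keeps the final prompt short, while a larger one gives the model more history to draw on.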

# 🗑 delete

## Delete Document

`delete()` method allows you to delete a document previously added to the app.

### Usage

```python

from embedchain import App

app = App()

forbes_doc_id = app.add("https://www.forbes.com/profile/elon-musk")

wiki_doc_id = app.add("https://en.wikipedia.org/wiki/Elon_Musk")

app.delete(forbes_doc_id) # deletes the forbes document

```

If you do not have the document id, you can use the `app.db.get()` method to fetch the document and extract the `hash` key from the `metadatas` dictionary object, which serves as the document id.
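The lookup can be sketched as follows. The shape of `db_result` (a dictionary with a `metadatas` list whose entries carry `url` and `hash` keys) is an assumption about what `app.db.get()` returns, and `doc_ids_for_url` is a hypothetical helper; inspect the real return value before relying on it.

```python
# Assumed shape of the value returned by app.db.get(); the values are made up.
db_result = {
    "ids": ["chunk-1", "chunk-2", "chunk-3"],
    "metadatas": [
        {"url": "https://www.forbes.com/profile/elon-musk", "hash": "hash-forbes"},
        {"url": "https://www.forbes.com/profile/elon-musk", "hash": "hash-forbes"},
        {"url": "https://en.wikipedia.org/wiki/Elon_Musk", "hash": "hash-wiki"},
    ],
}


def doc_ids_for_url(db_result, url):
    """Collect the distinct `hash` values of all chunks that came from `url`."""
    doc_ids = []
    for metadata in db_result.get("metadatas", []):
        doc_hash = metadata.get("hash")
        if metadata.get("url") == url and doc_hash and doc_hash not in doc_ids:
            doc_ids.append(doc_hash)
    return doc_ids


print(doc_ids_for_url(db_result, "https://www.forbes.com/profile/elon-musk"))
```

Each returned value could then be passed to `app.delete()` in place of the id returned by `add()`.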

## Delete Chat Session History

`delete_session_chat_history()` method allows you to delete all previous messages in a chat history.

### Usage

```python

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

app.chat("What is the net worth of Elon Musk?")

app.delete_session_chat_history()

```

The `delete_session_chat_history()` method also accepts an optional `session_id` parameter, for example `delete_session_chat_history(session_id="session_1")`, to delete the chat history of a specific session.

If no `session_id` is provided, the default session is assumed.
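The per-session behaviour can be pictured with a small in-memory model. The `SessionHistory` class below is a hypothetical sketch, not Embedchain's implementation: it keeps one message list per `session_id` and falls back to the `default` session when no id is given.

```python
from collections import defaultdict


class SessionHistory:
    """Toy model of chat history that is stored separately for each session."""

    def __init__(self):
        self._messages = defaultdict(list)

    def record(self, question, answer, session_id="default"):
        """Append one question/answer pair to the given session."""
        self._messages[session_id].append((question, answer))

    def get(self, session_id="default"):
        """Return a copy of the session's messages (empty if the session is unknown)."""
        return list(self._messages.get(session_id, []))

    def delete(self, session_id="default"):
        """Drop all messages of one session and leave the other sessions untouched."""
        self._messages.pop(session_id, None)


history = SessionHistory()
history.record("What is the net worth of Elon Musk?", "...", session_id="session_1")
history.record("Who founded Tesla?", "...")  # goes to the default session
history.delete(session_id="session_1")
print(history.get("session_1"), history.get())
```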

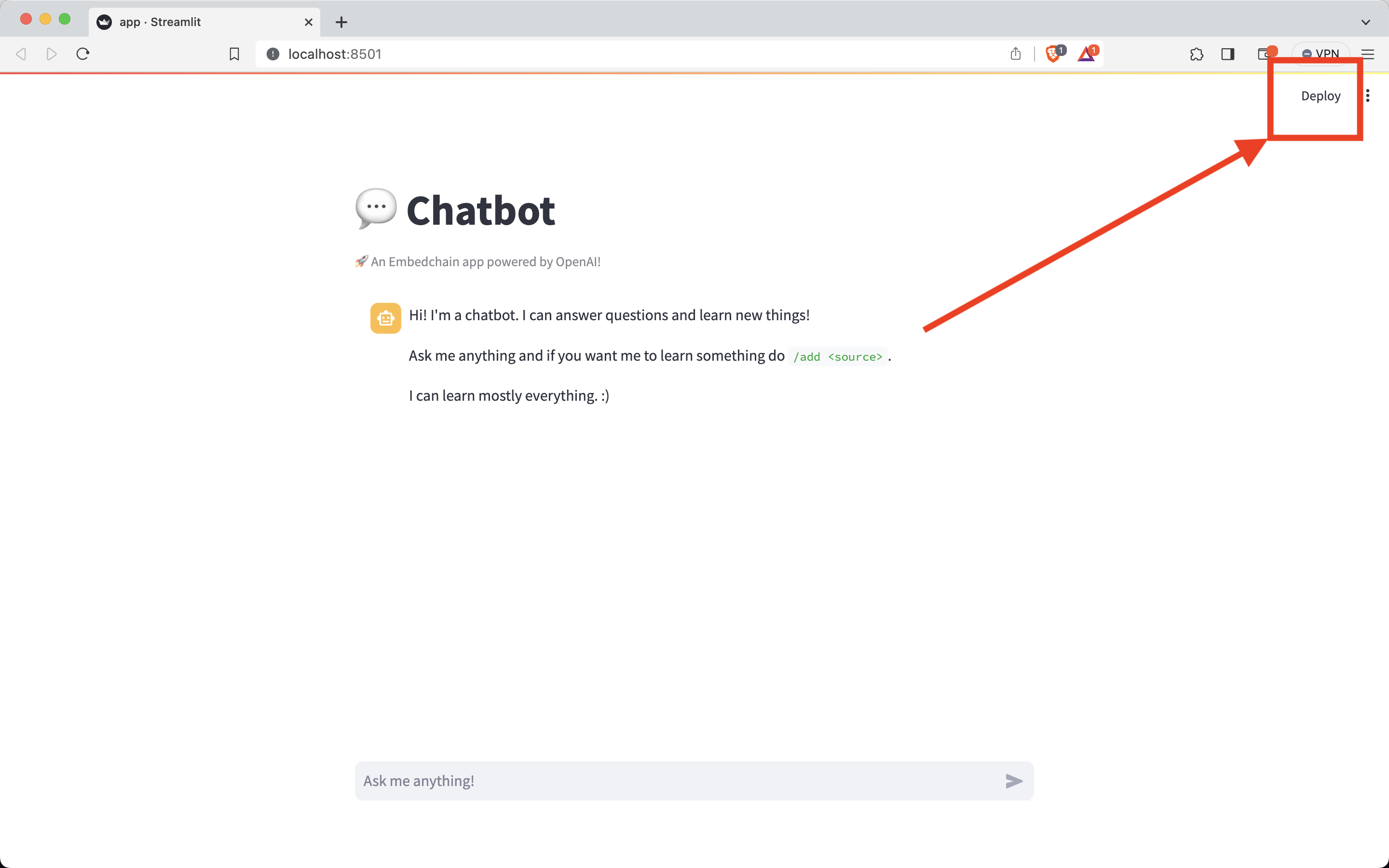

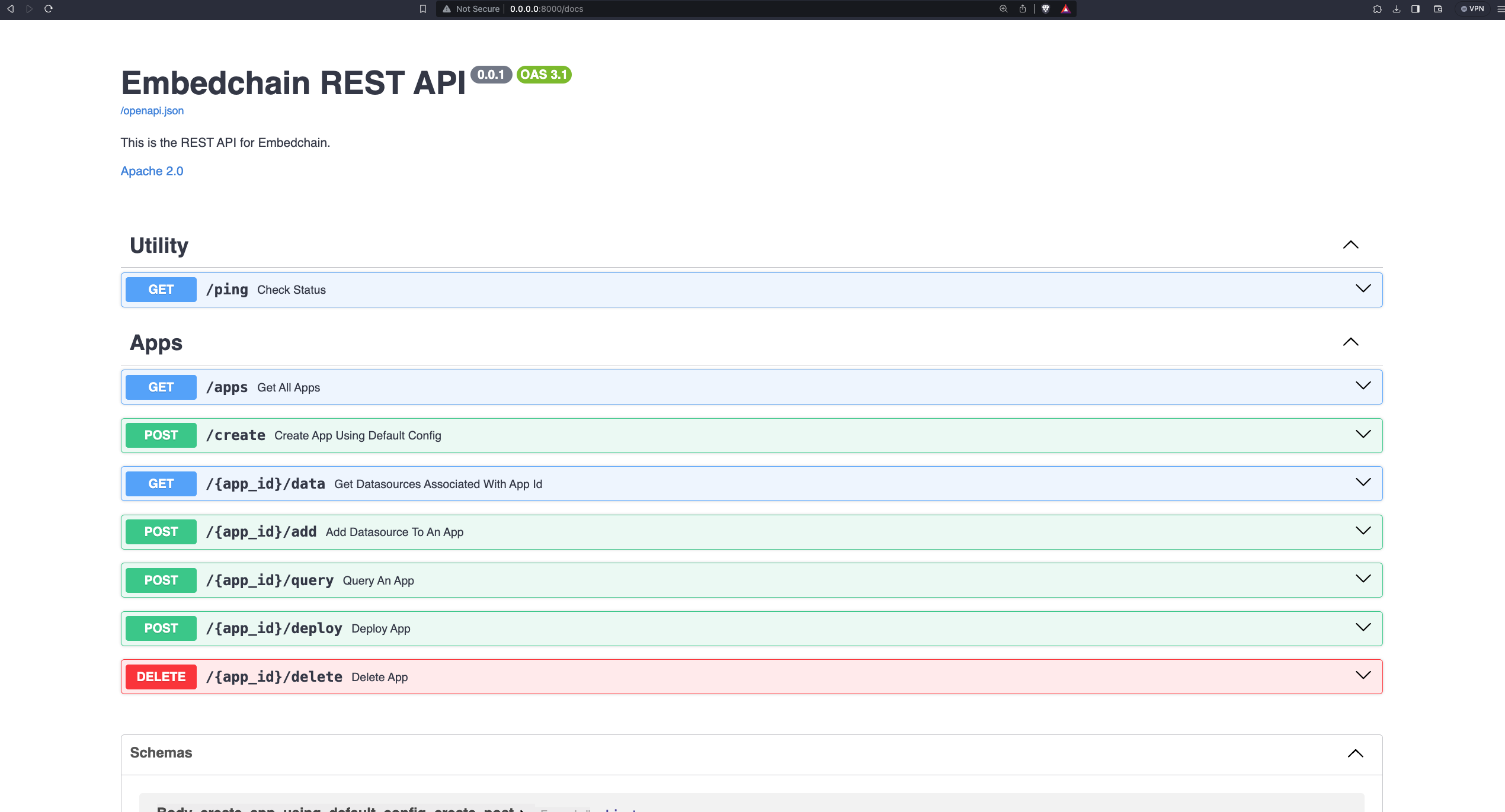

# 🚀 deploy

The `deploy()` method is currently available on an invitation-only basis. To request access, please submit your information via the provided [Google Form](https://forms.gle/vigN11h7b4Ywat668). We will review your request and respond promptly.

# 📝 evaluate

`evaluate()` method is used to evaluate the performance of a RAG app. You can find the signature below:

### Parameters

* `questions`: A question or a list of questions to evaluate your app on.

* `metrics`: The metrics to evaluate your app on. Defaults to all metrics: `["context_relevancy", "answer_relevancy", "groundedness"]`

* `num_workers`: The number of threads to use for parallel processing.

### Returns

Returns the metrics you have chosen to evaluate your app on as a dictionary.

## Usage

```python

from embedchain import App

app = App()

# add data source

app.add("https://www.forbes.com/profile/elon-musk")

# run evaluation

app.evaluate("what is the net worth of Elon Musk?")

# {'answer_relevancy': 0.958019958036268, 'context_relevancy': 0.12903225806451613}

# or

# app.evaluate(["what is the net worth of Elon Musk?", "which companies does Elon Musk own?"])

```

# 📄 get

## Get data sources

`get_data_sources()` returns a list of all the data sources added in the app.

### Usage

```python

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

app.add("https://en.wikipedia.org/wiki/Elon_Musk")

data_sources = app.get_data_sources()

# [

# {

# 'data_type': 'web_page',

# 'data_value': 'https://en.wikipedia.org/wiki/Elon_Musk',

# 'metadata': 'null'

# },

# {

# 'data_type': 'web_page',

# 'data_value': 'https://www.forbes.com/profile/elon-musk',

# 'metadata': 'null'

# }

# ]

```

# App

Create a RAG app object on Embedchain. This is the main entrypoint for a developer to interact with Embedchain APIs. An app configures the llm, vector database, embedding model, and retrieval strategy of your choice.

### Attributes

App ID

Name of the app

Configuration of the app

Configured LLM for the RAG app

Configured vector database for the RAG app

Configured embedding model for the RAG app

Chunker configuration

Client object (used to deploy an app to Embedchain platform)

Logger object

## Usage

You can create an app instance using the following methods:

### Default setting

```python Code Example

from embedchain import App

app = App()

```

### Python Dict

```python Code Example

from embedchain import App

config_dict = {

'llm': {

'provider': 'gpt4all',

'config': {

'model': 'orca-mini-3b-gguf2-q4_0.gguf',

'temperature': 0.5,

'max_tokens': 1000,

'top_p': 1,

'stream': False

}

},

'embedder': {

'provider': 'gpt4all'

}

}

# load llm configuration from config dict

app = App.from_config(config=config_dict)

```

### YAML Config

```python main.py

from embedchain import App

# load llm configuration from config.yaml file

app = App.from_config(config_path="config.yaml")

```

```yaml config.yaml

llm:

provider: gpt4all

config:

model: 'orca-mini-3b-gguf2-q4_0.gguf'

temperature: 0.5

max_tokens: 1000

top_p: 1

stream: false

embedder:

provider: gpt4all

```

### JSON Config

```python main.py

from embedchain import App

# load llm configuration from config.json file

app = App.from_config(config_path="config.json")

```

```json config.json

{

"llm": {

"provider": "gpt4all",

"config": {

"model": "orca-mini-3b-gguf2-q4_0.gguf",

"temperature": 0.5,

"max_tokens": 1000,

"top_p": 1,

"stream": false

}

},

"embedder": {

"provider": "gpt4all"

}

}

```

# ❓ query

The `.query()` method lets you ask questions and receive relevant answers through a user-friendly query API. The function signature is given below:

### Parameters

* `input_query`: The question to ask.

* `config`: Configures different llm settings such as prompt, temperature, number\_documents, etc.

* `dry_run`: Tests the prompt structure without actually running LLM inference. Defaults to `False`

* `where`: A dictionary of key-value pairs to filter the chunks from the vector database. Defaults to `None`

* `citations`: Returns citations along with the LLM answer. Defaults to `False`

### Returns

If `citations=False`, returns a stringified answer to the question asked.

If `citations=True`, returns a tuple with answer and citations respectively.

## Usage

### With citations

If you want both the answer to your question and its citations, use the following code snippet:

```python With Citations

from embedchain import App

# Initialize app

app = App()

# Add data source

app.add("https://www.forbes.com/profile/elon-musk")

# Get relevant answer for your query

answer, sources = app.query("What is the net worth of Elon?", citations=True)

print(answer)

# Answer: The net worth of Elon Musk is $221.9 billion.

print(sources)

# [

# (

# 'Elon Musk PROFILEElon MuskCEO, Tesla$247.1B$2.3B (0.96%)Real Time Net Worthas of 12/7/23 ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.89,

# ...

# }

# ),

# (

# '74% of the company, which is now called X.Wealth HistoryHOVER TO REVEAL NET WORTH BY YEARForbes ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.81,

# ...

# }

# ),

# (

# 'founded in 2002, is worth nearly $150 billion after a $750 million tender offer in June 2023 ...',

# {

# 'url': 'https://www.forbes.com/profile/elon-musk',

# 'score': 0.73,

# ...

# }

# )

# ]

```

When `citations=True`, note that the returned `sources` are a list of tuples where each tuple has two elements (in the following order):

1. source chunk

2. dictionary with metadata about the source chunk

   * `url`: url of the source

   * `doc_id`: document id (used for bookkeeping purposes)

   * `score`: score of the source chunk with respect to the question

   * other metadata you might have added at the time of adding the source

### Without citations

If you just want to return answers and don't want to return citations, you can use the following example:

```python Without Citations

from embedchain import App

# Initialize app

app = App()

# Add data source

app.add("https://www.forbes.com/profile/elon-musk")

# Get relevant answer for your query

answer = app.query("What is the net worth of Elon?")

print(answer)

# Answer: The net worth of Elon Musk is $221.9 billion.

```

# 🔄 reset

`reset()` method allows you to wipe the data from your RAG application and start from scratch.

## Usage

```python

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

# Reset the app

app.reset()

```

# 🔍 search

`.search()` enables you to uncover the most pertinent context by performing a semantic search across your data sources based on a given query. Refer to the function signature below:

### Parameters

* `query`: The question to search for.

* `num_documents`: The number of relevant documents to fetch. Defaults to `3`

* `where`: Key-value pairs for metadata filtering.

* `raw_filter`: A raw filter query in the format of your vector database. Currently, the `raw_filter` param is only supported for the Pinecone vector database.

### Returns

Returns a list of dictionaries that contain the relevant chunks and their source information.

## Usage

### Basic

Refer to the following example on how to use the search api:

```python Code example

from embedchain import App

app = App()

app.add("https://www.forbes.com/profile/elon-musk")

context = app.search("What is the net worth of Elon?", num_documents=2)

print(context)

```

### Advanced

#### Metadata filtering using `where` params

Here is an advanced example of the `search()` API with metadata filtering on a Pinecone database:

```python

import os

from embedchain import App

os.environ["PINECONE_API_KEY"] = "xxx"

config = {

"vectordb": {

"provider": "pinecone",

"config": {

"metric": "dotproduct",

"vector_dimension": 1536,

"index_name": "ec-test",

"serverless_config": {"cloud": "aws", "region": "us-west-2"},

},

}

}

app = App.from_config(config=config)

app.add("https://www.forbes.com/profile/bill-gates", metadata={"type": "forbes", "person": "gates"})

app.add("https://en.wikipedia.org/wiki/Bill_Gates", metadata={"type": "wiki", "person": "gates"})

results = app.search("What is the net worth of Bill Gates?", where={"person": "gates"})

print("Num of search results: ", len(results))

```

#### Metadata filtering using `raw_filter` params

The following example filters on metadata by passing a raw filter query in the format that the Pinecone vector database expects:

```python

import os

from embedchain import App

os.environ["PINECONE_API_KEY"] = "xxx"

config = {

"vectordb": {

"provider": "pinecone",

"config": {

"metric": "dotproduct",

"vector_dimension": 1536,

"index_name": "ec-test",

"serverless_config": {"cloud": "aws", "region": "us-west-2"},

},

}

}

app = App.from_config(config=config)

app.add("https://www.forbes.com/profile/bill-gates", metadata={"year": 2022, "person": "gates"})

app.add("https://en.wikipedia.org/wiki/Bill_Gates", metadata={"year": 2024, "person": "gates"})

print("Filter with person: gates and year > 2023")

raw_filter = {"$and": [{"person": "gates"}, {"year": {"$gt": 2023}}]}

results = app.search("What is the net worth of Bill Gates?", raw_filter=raw_filter)

print("Num of search results: ", len(results))

```
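To show what the `raw_filter` above selects, the sketch below evaluates the same filter locally against plain metadata dictionaries. It handles only `$and`, `$or`, and a few comparison operators, and it is a simplified stand-in written for illustration: the real filtering happens inside the vector database, and `matches` is a hypothetical helper.

```python
import operator

# Comparison operators handled by this sketch.
COMPARISONS = {
    "$eq": operator.eq,
    "$gt": operator.gt,
    "$gte": operator.ge,
    "$lt": operator.lt,
    "$lte": operator.le,
}


def matches(metadata, raw_filter):
    """Return True if `metadata` satisfies the subset of filter syntax handled here."""
    for key, condition in raw_filter.items():
        if key == "$and":
            if not all(matches(metadata, sub) for sub in condition):
                return False
        elif key == "$or":
            if not any(matches(metadata, sub) for sub in condition):
                return False
        elif isinstance(condition, dict):
            # Operator form, for example {"year": {"$gt": 2023}}
            if key not in metadata:
                return False
            for op, target in condition.items():
                if op not in COMPARISONS:
                    raise ValueError(f"unsupported operator: {op}")
                if not COMPARISONS[op](metadata[key], target):
                    return False
        elif metadata.get(key) != condition:
            # Plain form, for example {"person": "gates"}
            return False
    return True


raw_filter = {"$and": [{"person": "gates"}, {"year": {"$gt": 2023}}]}
print(matches({"year": 2022, "person": "gates"}, raw_filter))  # the 2022 source
print(matches({"year": 2024, "person": "gates"}, raw_filter))  # the 2024 source
```

With the two sources added in the example, only the one tagged `year: 2024` passes the filter.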

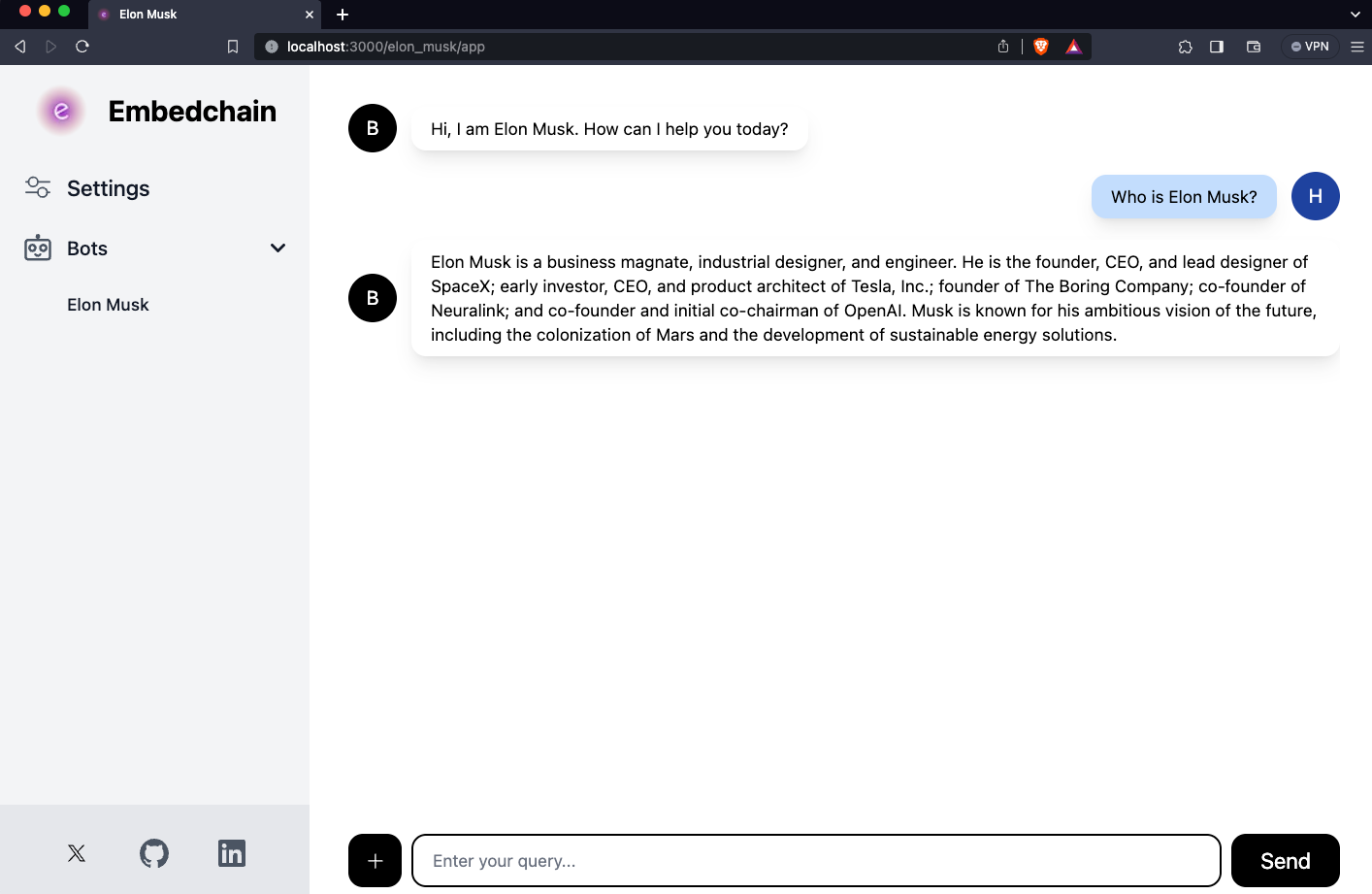

# AI Assistant

The `AIAssistant` class, an alternative to the OpenAI Assistant API, is designed for those who prefer using large language models (LLMs) other than those provided by OpenAI. It facilitates the creation of AI Assistants with several key benefits:

* **Visibility into Citations**: It offers transparent access to the sources and citations used by the AI, enhancing the understanding and trustworthiness of its responses.

* **Debugging Capabilities**: Users have the ability to delve into and debug the AI's processes, allowing for a deeper understanding and fine-tuning of its performance.

* **Customizable Prompts**: The class provides the flexibility to modify and tailor prompts according to specific needs, enabling more precise and relevant interactions.

* **Chain of Thought Integration**: It supports the incorporation of a 'chain of thought' approach, which helps in breaking down complex queries into simpler, sequential steps, thereby improving the clarity and accuracy of responses.

It is ideal for those who value customization, transparency, and detailed control over their AI Assistant's functionalities.

### Arguments

Name for your AI assistant

How the Assistant and model should behave or respond

Load existing AI Assistant. If you pass this, you don't have to pass other arguments.

Existing thread id if exists

Embedchain pipeline config yaml path to use. This will define the configuration of the AI Assistant (such as configuring the LLM, vector database, and embedding model)

Add data sources to your assistant. You can add in the following format: `[{"source": "https://example.com", "data_type": "web_page"}]`

Anonymous telemetry (doesn't collect any user information or user's files). Used to improve the Embedchain package utilization. Default is `True`.

## Usage

For detailed guidance on creating your own AI Assistant, click the link below. It provides step-by-step instructions to help you through the process:

Learn how to build a customized AI Assistant using the `AIAssistant` class.

# OpenAI Assistant

### Arguments

Name for your AI assistant

How the Assistant and model should behave or respond

Load existing OpenAI Assistant. If you pass this, you don't have to pass other arguments.

Existing OpenAI thread id if exists

OpenAI model to use

OpenAI tools to use. Default set to `[{"type": "retrieval"}]`

Add data sources to your assistant. You can add in the following format: `[{"source": "https://example.com", "data_type": "web_page"}]`

Anonymous telemetry (doesn't collect any user information or user's files). Used to improve the Embedchain package utilization. Default is `True`.

## Usage

For detailed guidance on creating your own OpenAI Assistant, click the link below. It provides step-by-step instructions to help you through the process:

Learn how to build an OpenAI Assistant using the `OpenAIAssistant` class.

# 🤝 Connect with Us

We believe in building a vibrant and supportive community around embedchain. There are various channels through which you can connect with us, stay updated, and contribute to the ongoing discussions:

Follow us on Twitter

Join our slack community

Join our discord community

Connect with us on LinkedIn

Schedule a call with Embedchain founder

Subscribe to our newsletter

We look forward to connecting with you and seeing how we can create amazing things together!

# 🎤 Audio

To use an audio file as a data source, set `data_type` to `audio` and pass in the path of the audio file (local or hosted).

We use [Deepgram](https://developers.deepgram.com/docs/introduction) to transcribe the audio to text, and then use the generated text as the data source.

To use this feature, you need a Deepgram API key, which is available [here](https://console.deepgram.com/signup?jump=keys).

### Without customization

```python

import os

from embedchain import App

os.environ["DEEPGRAM_API_KEY"] = "153xxx"

app = App()

app.add("introduction.wav", data_type="audio")

response = app.query("What is my name and how old am I?")

print(response)

# Answer: Your name is Dave and you are 21 years old.

```

# 🐝 Beehiiv

To add any Beehiiv data sources to your app, just add the base url as the source and set the data\_type to `beehiiv`.

```python

from embedchain import App

app = App()

# source: just add the base url and set the data_type to 'beehiiv'

app.add('https://aibreakfast.beehiiv.com', data_type='beehiiv')

app.query("How much is OpenAI paying developers?")

# Answer: OpenAI is aggressively recruiting Google's top AI researchers with offers ranging between $5 to $10 million annually, primarily in stock options.

```

# 📊 CSV

You can load any csv file from your local file system or through a URL. Headers are included for each line, so if you have an `age` column, `18` will be added as `age: 18`.

## Usage

### Load from a local file

```python

from embedchain import App

app = App()

app.add('/path/to/file.csv', data_type='csv')

```

### Load from URL

```python

from embedchain import App

app = App()

app.add('https://people.sc.fsu.edu/~jburkardt/data/csv/airtravel.csv', data_type="csv")

```

CSV files above a certain size can cause an error. This limit is set by the LLM. Consider splitting large CSV files into smaller ones.
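One way to split a large file is sketched below using only the standard library. The helper names `split_csv` and `_write_part`, the `_partN` file naming, and the default of 1000 rows per file are assumptions made for illustration; they are not part of Embedchain. The header row is repeated in every part so that each part stays a valid CSV on its own.

```python
import csv
from pathlib import Path


def _write_part(src, index, header, rows):
    """Write one part next to the source file and return its path."""
    part = src.with_name(f"{src.stem}_part{index}{src.suffix}")
    with part.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return part


def split_csv(path, rows_per_file=1000):
    """Split one CSV into numbered parts, repeating the header in each part."""
    src = Path(path)
    parts = []
    with src.open(newline="") as f:
        reader = csv.reader(f)
        header = next(reader, None)
        if header is None:  # empty file: nothing to split
            return parts
        rows = []
        for row in reader:
            rows.append(row)
            if len(rows) == rows_per_file:
                parts.append(_write_part(src, len(parts) + 1, header, rows))
                rows = []
        if rows:  # leftover rows that did not fill a whole part
            parts.append(_write_part(src, len(parts) + 1, header, rows))
    return parts
```

Each returned path could then be passed to `app.add(str(part), data_type='csv')` in turn.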

# ⚙️ Custom

When we say "custom", we mean that you can customize the loader and chunker to your needs. This is done by passing a custom loader and chunker to the `add` method.

```python

from embedchain import App

# CustomLoader and CustomChunker are placeholders for your own classes

from my_module import CustomChunker, CustomLoader

app = App()

loader = CustomLoader()

chunker = CustomChunker()

app.add("source", data_type="custom", loader=loader, chunker=chunker)

```

The custom loader and chunker must be a class that inherits from the [`BaseLoader`](https://github.com/embedchain/embedchain/blob/main/embedchain/loaders/base_loader.py) and [`BaseChunker`](https://github.com/embedchain/embedchain/blob/main/embedchain/chunkers/base_chunker.py) classes respectively.

If the `data_type` is not a valid data type, the `add` method will fall back to the `custom` data type and expect a custom loader and chunker to be passed by the user.

Example:

```python

from embedchain import App

from embedchain.loaders.github import GithubLoader

app = App()

loader = GithubLoader(config={"token": "ghp_xxx"})

app.add("repo:embedchain/embedchain type:repo", data_type="github", loader=loader)

app.query("What is Embedchain?")

# Answer: Embedchain is a Data Platform for Large Language Models (LLMs). It allows users to seamlessly load, index, retrieve, and sync unstructured data in order to build dynamic, LLM-powered applications. There is also a JavaScript implementation called embedchain-js available on GitHub.

```

# Data type handling

## Automatic data type detection

The add method automatically tries to detect the data\_type, based on your input for the source argument. So `app.add('https://www.youtube.com/watch?v=dQw4w9WgXcQ')` is enough to embed a YouTube video.

This detection is implemented for all formats. It is based on factors such as whether it's a URL, a local file, the source data type, etc.

### Debugging automatic detection

Set `log_level: DEBUG` in the config yaml to check whether the data type was detected correctly. Otherwise, you will not know when, for instance, an invalid file path is interpreted as raw text instead.

### Forcing a data type

To avoid any issues with data type detection, you can **force** a data\_type by passing it as an argument to the `add` method.

The examples below show you the keyword to force the respective `data_type`.

Forcing can also be used for edge cases, such as interpreting a sitemap as a web\_page, for reading its raw text instead of following links.

## Remote data types

**Use local files in remote data types**

Some data\_types are meant for remote content and only work with URLs.

You can pass local files by formatting the path using the `file:` [URI scheme](https://en.wikipedia.org/wiki/File_URI_scheme), e.g. `file:///info.pdf`.
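A minimal sketch of building such a URI with the standard library follows. The helper name `to_file_uri` is hypothetical; `Path.as_uri()` requires an absolute path, so the path is resolved first.

```python
from pathlib import Path


def to_file_uri(path):
    """Return a file: URI for a local path (made absolute first)."""
    return Path(path).resolve().as_uri()


print(to_file_uri("info.pdf"))

# The result could then be passed as the source of a remote data type:
# app.add(to_file_uri("info.pdf"), data_type="<remote data type>")
```

Characters such as spaces are percent-encoded by `as_uri()`, so the result is safe to pass wherever a URL is expected.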

## Reusing a vector database

Default behavior is to create a persistent vector db in the directory **./db**. You can split your application into two Python scripts: one to create a local vector db and the other to reuse this local persistent vector db. This is useful when you want to index hundreds of documents and separately implement a chat interface.

Create a local index:

```python

from embedchain import App

config = {

"app": {

"config": {

"id": "app-1"

}

}

}

naval_chat_bot = App.from_config(config=config)

naval_chat_bot.add("https://www.youtube.com/watch?v=3qHkcs3kG44")

naval_chat_bot.add("https://navalmanack.s3.amazonaws.com/Eric-Jorgenson_The-Almanack-of-Naval-Ravikant_Final.pdf")

```

You can reuse the local index with the same code, but without adding new documents:

```python

from embedchain import App

config = {

"app": {

"config": {

"id": "app-1"

}

}

}

naval_chat_bot = App.from_config(config=config)

print(naval_chat_bot.query("What unique capacity does Naval argue humans possess when it comes to understanding explanations or concepts?"))

```

## Resetting an app and vector database

You can reset the app by simply calling the `reset` method. This will delete the vector database and all other app related files.

```python

from embedchain import App

config = {
    "app": {
        "config": {
            "id": "app-1"
        }
    }
}

app = App.from_config(config=config)

app.add("https://www.youtube.com/watch?v=3qHkcs3kG44")

# Deletes the vector database and all other app related files
app.reset()

```

# 📁 Directory/Folder

To use an entire directory as a data source, set `data_type` to `directory` and pass in the path of the local directory.

### Without customization

```python

import os

from embedchain import App

os.environ["OPENAI_API_KEY"] = "sk-xxx"

app = App()

app.add("./elon-musk", data_type="directory")

response = app.query("list all files")

print(response)

# Answer: Files are elon-musk-1.txt, elon-musk-2.pdf.

```

### Customization

```python

import os

from embedchain import App

from embedchain.loaders.directory_loader import DirectoryLoader

os.environ["OPENAI_API_KEY"] = "sk-xxx"

lconfig = {

"recursive": True,

"extensions": [".txt"]

}

loader = DirectoryLoader(config=lconfig)

app = App()

app.add("./elon-musk", loader=loader)

response = app.query("what are all the files related to?")

print(response)

# Answer: The files are related to Elon Musk.

```

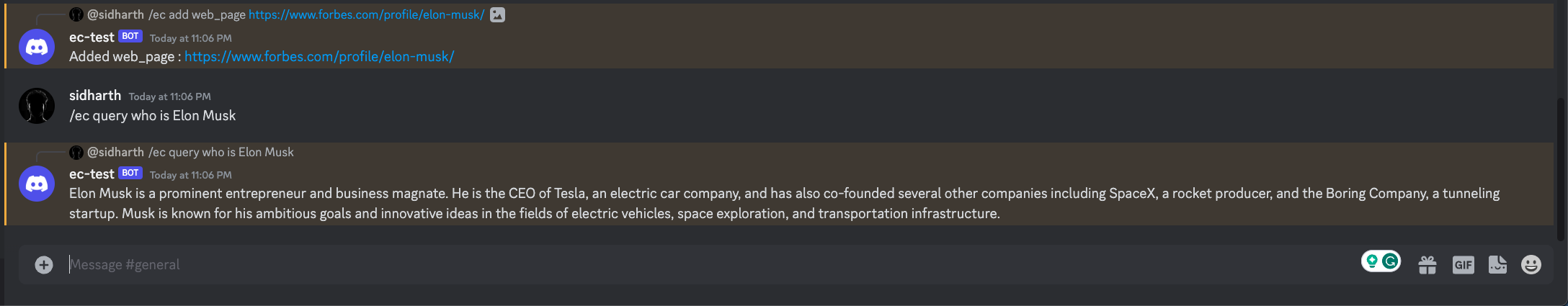

# 💬 Discord

To add any Discord channel messages to your app, just add the `channel_id` as the source and set the `data_type` to `discord`.

This loader requires a Discord bot token with read messages access.

To obtain the token, follow the instructions in the tutorial "How to Get a Discord Bot Token?".

```python

import os

from embedchain import App

# add your discord "BOT" token

os.environ["DISCORD_TOKEN"] = "xxx"

app = App()

app.add("1177296711023075338", data_type="discord")

response = app.query("What is Joe saying about Elon Musk?")

print(response)

# Answer: Joe is saying "Elon Musk is a genius".

```

# 🗨️ Discourse

You can now easily load data from your community built with [Discourse](https://discourse.org/).

## Example

1. Setup the Discourse Loader with your community url.

```Python

from embedchain.loaders.discourse import DiscourseLoader

dicourse_loader = DiscourseLoader(config={"domain": "https://community.openai.com"})

```

2. Once you setup the loader, you can create an app and load data using the above discourse loader

```Python

import os

from embedchain.pipeline import Pipeline as App

os.environ["OPENAI_API_KEY"] = "sk-xxx"

app = App()

app.add("openai after:2023-10-1", data_type="discourse", loader=dicourse_loader)

question = "Where can I find the OpenAI API status page?"

app.query(question)

# Answer: You can find the OpenAI API status page at https://status.openai.com/.

```

NOTE: The `add` function of the app accepts any executable search query to load data. Refer to the [Discourse API Docs](https://docs.discourse.org/#tag/Search) to learn more about search queries.

3. We automatically create a chunker to chunk your Discourse data. If you wish to provide your own chunker class, here is how you can do that:

```Python

from embedchain.chunkers.discourse import DiscourseChunker

from embedchain.config.add_config import ChunkerConfig

discourse_chunker_config = ChunkerConfig(chunk_size=1000, chunk_overlap=0, length_function=len)

discourse_chunker = DiscourseChunker(config=discourse_chunker_config)

app.add("openai", data_type='discourse', loader=dicourse_loader, chunker=discourse_chunker)

```
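To make the `chunk_size` and `chunk_overlap` parameters more concrete, here is a simplified character-based sketch. It is an illustration only and not Embedchain's chunker, which may split on separators and measure length differently; the function name `chunk_text` is hypothetical.

```python
def chunk_text(text, chunk_size=1000, chunk_overlap=0):
    """Split text into windows of chunk_size characters.

    Consecutive windows share chunk_overlap characters.
    """
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window reached the end
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
```

With an overlap of 1, the last character of each chunk is repeated as the first character of the next, which keeps some context across chunk boundaries at the cost of storing a little more text.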

# 📚 Code Docs website

To add any code documentation website as a loader, use the data\_type as `docs_site`. Eg:

```python

from embedchain import App

app = App()

app.add("https://docs.embedchain.ai/", data_type="docs_site")

app.query("What is Embedchain?")

# Answer: Embedchain is a platform that utilizes various components, including paid/proprietary ones, to provide what is believed to be the best configuration available. It uses LLM (Language Model) providers such as OpenAI, Anthpropic, Vertex_AI, GPT4ALL, Azure_OpenAI, LLAMA2, JINA, Ollama, Together and COHERE. Embedchain allows users to import and utilize these LLM providers for their applications.'

```

# 📄 Docx file

To add any doc/docx file, use the data\_type as `docx`. `docx` allows remote urls and conventional file paths. Eg:

```python

from embedchain import App

app = App()

app.add('https://example.com/content/intro.docx', data_type="docx")

# Or add file using the local file path on your system

# app.add('content/intro.docx', data_type="docx")

app.query("Summarize the docx data?")

```

# 💾 Dropbox

To load folders or files from your Dropbox account, configure the `data_type` parameter as `dropbox` and specify the path to the desired file or folder, starting from the root directory of your Dropbox account.

For Dropbox access, an **access token** is required. Obtain this token by visiting [Dropbox Developer Apps](https://www.dropbox.com/developers/apps). There, create a new app and generate an access token for it.

Ensure your app has the following settings activated:

* In the Permissions section, enable `files.content.read` and `files.metadata.read`.

## Usage

Install the `dropbox` pypi package:

```bash

pip install dropbox

```

Following is an example of how to use the dropbox loader:

```python

import os

from embedchain import App

os.environ["DROPBOX_ACCESS_TOKEN"] = "sl.xxx"

os.environ["OPENAI_API_KEY"] = "sk-xxx"

app = App()

# any path from the root of your dropbox account, you can leave it "" for the root folder

app.add("/test", data_type="dropbox")

print(app.query("Which two celebrities are mentioned here?"))

# The two celebrities mentioned in the given context are Elon Musk and Jeff Bezos.

```

# 📝 Github

1. Setup the Github loader by configuring the Github account with username and personal access token (PAT). Check out [this](https://docs.github.com/en/enterprise-server@3.6/authentication/keeping-your-account-and-data-secure/managing-your-personal-access-tokens#creating-a-personal-access-token) link to learn how to create a PAT.

```Python

from embedchain.loaders.github import GithubLoader

loader = GithubLoader(

config={

"token":"ghp_xxxx"

}

)

```

2. Once you setup the loader, you can create an app and load data using the above Github loader

```Python

import os

from embedchain.pipeline import Pipeline as App

os.environ["OPENAI_API_KEY"] = "sk-xxxx"

app = App()

app.add("repo:embedchain/embedchain type:repo", data_type="github", loader=loader)

response = app.query("What is Embedchain?")

# Answer: Embedchain is a Data Platform for Large Language Models (LLMs). It allows users to seamlessly load, index, retrieve, and sync unstructured data in order to build dynamic, LLM-powered applications. There is also a JavaScript implementation called embedchain-js available on GitHub.

```

The `add` function of the app will accept any valid github query with qualifiers. It only supports loading github code, repository, issues and pull-requests.

You must provide qualifiers `type:` and `repo:` in the query. The `type:` qualifier can be a combination of `code`, `repo`, `pr`, `issue`, `branch`, `file`. The `repo:` qualifier must be a valid github repository name.

* `repo:embedchain/embedchain type:repo` - to load the repository

* `repo:embedchain/embedchain type:branch name:feature_test` - to load the branch of the repository

* `repo:embedchain/embedchain type:file path:README.md` - to load the specific file of the repository

* `repo:embedchain/embedchain type:issue,pr` - to load the issues and pull-requests of the repository

* `repo:embedchain/embedchain type:issue state:closed` - to load the closed issues of the repository
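The query strings above can also be assembled programmatically. The following is a hypothetical helper, not part of Embedchain: `build_github_query` and `ALLOWED_TYPES` are names invented for this sketch, and the validation only mirrors the qualifier rules described in this section.

```python
ALLOWED_TYPES = {"code", "repo", "pr", "issue", "branch", "file"}


def build_github_query(repo, types, **qualifiers):
    """Build a query such as 'repo:owner/name type:issue,pr state:closed'.

    `types` must be a list of type names, e.g. ["issue", "pr"].
    """
    types = list(types)
    unknown = [t for t in types if t not in ALLOWED_TYPES]
    if not types or unknown:
        raise ValueError(
            f"types must be a non-empty subset of {sorted(ALLOWED_TYPES)}, got {types}"
        )
    if repo.count("/") != 1:
        raise ValueError("repo must look like 'owner/name'")
    parts = [f"repo:{repo}", f"type:{','.join(types)}"]
    # Extra qualifiers (state, name, path, ...) are appended in the order given
    parts += [f"{key}:{value}" for key, value in qualifiers.items()]
    return " ".join(parts)


print(build_github_query("embedchain/embedchain", ["issue"], state="closed"))
```

The returned string can be passed as the source to `app.add(..., data_type="github", loader=loader)`.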

3. We automatically create a chunker to chunk your GitHub data. If you wish to provide your own chunker class, here is how you can do that:

```Python

from embedchain.chunkers.common_chunker import CommonChunker

from embedchain.config.add_config import ChunkerConfig

github_chunker_config = ChunkerConfig(chunk_size=2000, chunk_overlap=0, length_function=len)

github_chunker = CommonChunker(config=github_chunker_config)

app.add("repo:embedchain/embedchain type:repo", data_type="github", loader=loader, chunker=github_chunker)

```

# 📬 Gmail

To use GmailLoader you must install the extra dependencies with `pip install --upgrade embedchain[gmail]`.

The `source` must be a valid Gmail search query. Refer to `https://support.google.com/mail/answer/7190?hl=en` to build a query.

To load Gmail messages, you MUST set the data\_type to `gmail`. Otherwise the source will be detected as simple `text`.

To use this loader, save `credentials.json` in the directory from which you will run it. Follow these steps to get the credentials:

1. Go to the [Google Cloud Console](https://console.cloud.google.com/apis/credentials).

2. Create a project if you don't have one already.

3. Create an `OAuth Consent Screen` in the project. You may need to select the `external` option.

4. Make sure the consent screen is published.

5. Enable the [Gmail API](https://console.cloud.google.com/apis/api/gmail.googleapis.com)

6. Create credentials from the `Credentials` tab.

7. Select the type `OAuth Client ID`.

8. Choose the application type `Web application`. As a name you can choose `embedchain` or any other name as per your use case.

9. Add an authorized redirect URI for `http://localhost:8080/`.

10. You can leave everything else at default, finish the creation.

11. When you are done, a modal opens where you can download the details in `json` format.

12. Put the `.json` file in your current directory and rename it to `credentials.json`

```python

from embedchain import App

app = App()

gmail_filter = "to: me label:inbox"

app.add(gmail_filter, data_type="gmail")

app.query("Summarize my email conversations")

```

# 🖼️ Image

To use an image as data source, just add `data_type` as `image` and pass in the path of the image (local or hosted).

We use [GPT4 Vision](https://platform.openai.com/docs/guides/vision) to generate meaning of the image using a custom prompt, and then use the generated text as the data source.

You would require an OpenAI API key with access to `gpt-4-vision-preview` model to use this feature.

### Without customization

```python

import os

from embedchain import App

os.environ["OPENAI_API_KEY"] = "sk-xxx"

app = App()

app.add("./Elon-Musk.webp", data_type="image")

response = app.query("Describe the man in the image.")

print(response)

# Answer: The man in the image is dressed in formal attire, wearing a dark suit jacket and a white collared shirt. He has short hair and is standing. He appears to be gazing off to the side with a reflective expression. The background is dark with faint, warm-toned vertical lines, possibly from a lit environment behind the individual or reflections. The overall atmosphere is somewhat moody and introspective.

```

### Customization

```python

import os

from embedchain import App

from embedchain.loaders.image import ImageLoader

image_loader = ImageLoader(

max_tokens=100,

api_key="sk-xxx",

prompt="Is the person looking wealthy? Structure your thoughts around what you see in the image.",

)

app = App()

app.add("./Elon-Musk.webp", data_type="image", loader=image_loader)

response = app.query("Describe the man in the image.")

print(response)

# Answer: The man in the image appears to be well-dressed in a suit and shirt, suggesting that he may be in a professional or formal setting. His composed demeanor and confident posture further indicate a sense of self-assurance. Based on these visual cues, one could infer that the man may have a certain level of economic or social status, possibly indicating wealth or professional success.

```

# 📃 JSON

To add any json file, use the data\_type as `json`. Headers are included for each line, so for example if you have a json like `{"age": 18}`, then it will be added as `age: 18`.

Here are the supported sources for loading `json`:

```

1. URL - valid url to json file that ends with ".json" extension.

2. Local file - valid path to local json file that ends with ".json" extension.

3. String - valid json string (e.g. - app.add('{"foo": "bar"}'))

```

If you would like to add other data structures (e.g. list, dict etc.), convert it to a valid json first using `json.dumps()` function.
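For example, a Python list of dicts can be serialized as in the sketch below. The `records` data is made up for illustration, and the commented `app.add` call assumes an existing Embedchain `app`.

```python
import json

# Sample in-memory data (made up for this example)
records = [
    {"name": "Ada", "age": 36},
    {"name": "Linus", "age": 54},
]

# json.dumps turns the Python structure into a valid JSON string
json_source = json.dumps(records)
print(json_source)

# The string could then be added like any other JSON source:
# app.add(json_source, data_type="json")
```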

## Example

```python python

from embedchain import App

app = App()

# Add json file

app.add("temp.json")

app.query("What is the net worth of Elon Musk as of October 2023?")

# As of October 2023, Elon Musk's net worth is $255.2 billion.

```

```json temp.json

{

"question": "What is your net worth, Elon Musk?",

"answer": "As of October 2023, Elon Musk's net worth is $255.2 billion, making him one of the wealthiest individuals in the world."

}

```

# 📝 Mdx file

To add any `.mdx` file to your app, set the data\_type argument of the `.add()` method to `mdx`. Note that this only supports mdx files present on your machine, so the source should be a file path. Eg:

```python

from embedchain import App

app = App()

app.add('path/to/file.mdx', data_type='mdx')

app.query("What are the docs about?")

```

# 🐬 MySQL

1. Setup the MySQL loader by configuring the SQL db.

```Python

from embedchain.loaders.mysql import MySQLLoader

config = {

"host": "host",

"port": "port",

"database": "database",

"user": "username",

"password": "password",

}

mysql_loader = MySQLLoader(config=config)

```

For more details on how to setup with valid config, check MySQL [documentation](https://dev.mysql.com/doc/connector-python/en/connector-python-connectargs.html).

2. Once you setup the loader, you can create an app and load data using the above MySQL loader

```Python

from embedchain.pipeline import Pipeline as App

app = App()

app.add("SELECT * FROM table_name;", data_type='mysql', loader=mysql_loader)

# Adds `(1, 'What is your net worth, Elon Musk?', "As of October 2023, Elon Musk's net worth is $255.2 billion.")`

response = app.query("What is Elon Musk's net worth?")

# Answer: As of October 2023, Elon Musk's net worth is $255.2 billion.

```

NOTE: The `add` function of the app accepts any executable query to load data. DO NOT pass `CREATE` or `INSERT` queries to the `add` function.

3. We automatically create a chunker to chunk your SQL data. If you wish to provide your own chunker class, here is how you can do that:
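If queries come from user input or configuration, a simple guard can catch obvious mistakes before they reach the loader. This is a rough heuristic sketch, not an Embedchain feature: `is_select_query` is a hypothetical helper that only checks the first keyword, so it will also reject valid read-only statements that start differently (for example `WITH ...`).

```python
def is_select_query(query):
    """Return True if the statement starts with SELECT (case-insensitive)."""
    words = query.strip().split(None, 1)
    return bool(words) and words[0].upper() == "SELECT"


for q in ["SELECT * FROM table_name;", "INSERT INTO table_name VALUES (1);"]:
    print(q, "->", is_select_query(q))
```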

```Python

from embedchain.chunkers.mysql import MySQLChunker

from embedchain.config.add_config import ChunkerConfig

mysql_chunker_config = ChunkerConfig(chunk_size=1000, chunk_overlap=0, length_function=len)

mysql_chunker = MySQLChunker(config=mysql_chunker_config)

app.add("SELECT * FROM table_name;", data_type='mysql', loader=mysql_loader, chunker=mysql_chunker)

```

# 📓 Notion

To use notion you must install the extra dependencies with `pip install --upgrade embedchain[community]`.

To load a notion page, use the data\_type as `notion`. Since it is hard to automatically detect, it is advised to specify the `data_type` when adding a notion document.

The source argument must **end** with the Notion page id. The id is a 32-character string. Eg:

```python

from embedchain import App

app = App()

app.add("cfbc134ca6464fc980d0391613959196", data_type="notion")

app.add("my-page-cfbc134ca6464fc980d0391613959196", data_type="notion")

app.add("https://www.notion.so/my-page-cfbc134ca6464fc980d0391613959196", data_type="notion")

app.query("Summarize the notion doc")

```
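To check a source before adding it, the trailing id can be extracted with a regular expression. This sketch assumes the id consists of 32 hexadecimal characters, as in the examples above (the text only states that it is a 32-character string); `extract_notion_page_id` is a hypothetical helper.

```python
import re

# Assumption: the page id is 32 hexadecimal characters at the very end
_PAGE_ID = re.compile(r"([0-9a-f]{32})$", re.IGNORECASE)


def extract_notion_page_id(source):
    """Return the trailing 32-character page id, or raise ValueError."""
    match = _PAGE_ID.search(source.strip())
    if match is None:
        raise ValueError(f"no 32-character page id at the end of {source!r}")
    return match.group(1).lower()


print(extract_notion_page_id("https://www.notion.so/my-page-cfbc134ca6464fc980d0391613959196"))
```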

# 🙌 OpenAPI

To add any OpenAPI spec yaml file (currently the json file will be detected as JSON data type), use the data\_type as 'openapi'. 'openapi' allows remote urls and conventional file paths.

```python

from embedchain import App

app = App()

app.add("https://github.com/openai/openai-openapi/blob/master/openapi.yaml", data_type="openapi")

# Or add using the local file path

# app.add("configs/openai_openapi.yaml", data_type="openapi")

app.query("What can OpenAI API endpoint do? Can you list the things it can learn from?")

# Answer: The OpenAI API endpoint allows users to interact with OpenAI's models and perform various tasks such as generating text, answering questions, summarizing documents, translating languages, and more. The specific capabilities and tasks that the API can learn from may vary depending on the models and features provided by OpenAI. For more detailed information, it is recommended to refer to the OpenAI API documentation at https://platform.openai.com/docs/api-reference.

```

The yaml file added to the App must contain the required OpenAPI fields, otherwise adding the OpenAPI spec will fail. Please refer to the [OpenAPI Spec Doc](https://spec.openapis.org/oas/v3.1.0).

# Overview

Embedchain comes with built-in support for various data sources. We handle the complexity of loading unstructured data from these data sources, allowing you to easily customize your app through a user-friendly interface.

# 📰 PDF

You can load any pdf file from your local file system or through a URL.

## Usage

### Load from a local file

```python

from embedchain import App

app = App()

app.add('/path/to/file.pdf', data_type='pdf_file')

```

### Load from URL

```python

from embedchain import App

app = App()

app.add('https://arxiv.org/pdf/1706.03762.pdf', data_type='pdf_file')

app.query("What is the paper 'attention is all you need' about?", citations=True)

# Answer: The paper "Attention Is All You Need" proposes a new network architecture called the Transformer, which is based solely on attention mechanisms. It suggests that complex recurrent or convolutional neural networks can be replaced with a simpler architecture that connects the encoder and decoder through attention. The paper discusses how this approach can improve sequence transduction models, such as neural machine translation.

# Contexts:

# [

# (

# 'Provided proper attribution is ...',

# {

# 'page': 0,

# 'url': 'https://arxiv.org/pdf/1706.03762.pdf',

# 'score': 0.3676220203221626,

# ...

# }

# ),

# ]

```

We also store the page number under the key `page` with each chunk, which helps you understand where the answer is coming from. You can fetch the `page` key during retrieval (refer to the example given above).
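A short sketch of reading the `page` key follows. It works on a hard-coded sample shaped like the `Contexts` output shown in the example above (a list of `(text, metadata)` tuples); the helper name `pages_by_url` is hypothetical.

```python
# Sample shaped like the contexts shown above
contexts = [
    (
        "Provided proper attribution is ...",
        {
            "page": 0,
            "url": "https://arxiv.org/pdf/1706.03762.pdf",
            "score": 0.3676220203221626,
        },
    ),
]


def pages_by_url(contexts):
    """Group the cited page numbers by source URL."""
    pages = {}
    for _text, metadata in contexts:
        pages.setdefault(metadata["url"], set()).add(metadata["page"])
    return {url: sorted(found) for url, found in pages.items()}


print(pages_by_url(contexts))
```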

Note that we do not support password protected pdf files.

# 🐘 Postgres

1. Setup the Postgres loader by configuring the postgres db.

```Python

from embedchain.loaders.postgres import PostgresLoader

config = {

"host": "host_address",

"port": "port_number",

"dbname": "database_name",

"user": "username",

"password": "password",

}

"""

config = {

"url": "your_postgres_url"

}

"""

postgres_loader = PostgresLoader(config=config)

```

You can either setup the loader by passing the postgresql url or by providing the config data.

For more details on how to setup with valid url and config, check postgres [documentation](https://www.postgresql.org/docs/current/libpq-connect.html#LIBPQ-CONNSTRING:~:text=34.1.1.%C2%A0Connection%20Strings-,%23,-Several%20libpq%20functions).

NOTE: if you provide the `url` field in config, all other fields will be ignored.

2. Once you setup the loader, you can create an app and load data using the above postgres loader

```Python

import os

from embedchain.pipeline import Pipeline as App

os.environ["OPENAI_API_KEY"] = "sk-xxx"

app = App()

question = "What is Elon Musk's networth?"

response = app.query(question)

# Answer: As of September 2021, Elon Musk's net worth is estimated to be around $250 billion, making him one of the wealthiest individuals in the world. However, please note that net worth can fluctuate over time due to various factors such as stock market changes and business ventures.

app.add("SELECT * FROM table_name;", data_type='postgres', loader=postgres_loader)

# Adds `(1, 'What is your net worth, Elon Musk?', "As of October 2023, Elon Musk's net worth is $255.2 billion.")`

response = app.query(question)

# Answer: As of October 2023, Elon Musk's net worth is $255.2 billion.

```

NOTE: The `add` function of the app accepts any executable query to load data. DO NOT pass `CREATE` or `INSERT` queries to the `add` function, because they return no rows and therefore add no data.

3. We automatically create a chunker to chunk your postgres data. If you wish to provide your own chunker class, here is how you can do that:

```Python

from embedchain.chunkers.postgres import PostgresChunker

from embedchain.config.add_config import ChunkerConfig

postgres_chunker_config = ChunkerConfig(chunk_size=1000, chunk_overlap=0, length_function=len)

postgres_chunker = PostgresChunker(config=postgres_chunker_config)

app.add("SELECT * FROM table_name;", data_type='postgres', loader=postgres_loader, chunker=postgres_chunker)

```

# ❓💬 Question and answer pair

QnA pair is a local data type. To supply your own QnA pair, use the data\_type as `qna_pair` and enter a tuple. Eg:

```python

from embedchain import App

app = App()

app.add(("Question", "Answer"), data_type="qna_pair")

```

# 🗺️ Sitemap

Add all web pages from an xml-sitemap. Filters non-text files. Use the data\_type as `sitemap`. Eg:

```python

from embedchain import App

app = App()

app.add('https://example.com/sitemap.xml', data_type='sitemap')

```

# 🤖 Slack

## Pre-requisite

* Download required packages by running `pip install --upgrade "embedchain[slack]"`.

* Configure your Slack user token as the environment variable `SLACK_USER_TOKEN`.

* Find your user token on your [Slack Account](https://api.slack.com/authentication/token-types)

* Make sure your slack user token includes [search](https://api.slack.com/scopes/search:read) scope.

## Example

### Get Started

This will automatically retrieve data from the workspace associated with the user's token.

```python

import os

from embedchain import App

os.environ["SLACK_USER_TOKEN"] = "xoxp-xxx"

app = App()

app.add("in:general", data_type="slack")

result = app.query("what are the messages in general channel?")

print(result)

```

### Customize your SlackLoader

1. Setup the Slack loader by configuring the Slack Webclient.

```Python

import os

from embedchain.loaders.slack import SlackLoader

os.environ["SLACK_USER_TOKEN"] = "xoxp-*"

config = {

'base_url': slack_app_url,

'headers': web_headers,

'team_id': slack_team_id,

}

loader = SlackLoader(config)

```

NOTE: you can also pass the `config` with `base_url`, `headers`, `team_id` to setup your SlackLoader.

2. Once you setup the loader, you can create an app and load data using the above slack loader

```Python

import os

from embedchain.pipeline import Pipeline as App

app = App()

app.add("in:random", data_type="slack", loader=loader)

question = "Which bots are available in the slack workspace's random channel?"

response = app.query(question)

# Answer: The available bot in the slack workspace's random channel is the Embedchain bot.

```

3. We automatically create a chunker to chunk your slack data. If you wish to provide your own chunker class, here is how you can do that:

```Python

from embedchain.chunkers.slack import SlackChunker

from embedchain.config.add_config import ChunkerConfig

slack_chunker_config = ChunkerConfig(chunk_size=1000, chunk_overlap=0, length_function=len)

slack_chunker = SlackChunker(config=slack_chunker_config)

app.add("in:random", data_type="slack", loader=loader, chunker=slack_chunker)

```

# 📝 Substack

To add any Substack data sources to your app, just add the main base url as the source and set the data\_type to `substack`.

```python

from embedchain import App

app = App()

# source: for any substack just add the root URL

app.add('https://www.lennysnewsletter.com', data_type='substack')

app.query("Who is Brian Chesky?")

# Answer: Brian Chesky is the co-founder and CEO of Airbnb.

```

# 📝 Text

Text is a local data type. To supply your own text, set the data\_type to `text` and pass a string. The text is not processed, which makes this data type very versatile. Eg:

```python

from embedchain import App

app = App()

app.add('Seek wealth, not money or status. Wealth is having assets that earn while you sleep. Money is how we transfer time and wealth. Status is your place in the social hierarchy.', data_type='text')

```

Note: This is not used in the examples because in most cases you will supply a whole paragraph or file, which would not fit.

# 🌐 HTML Web page

To add any web page, use the data\_type as `web_page`. Eg:

```python

from embedchain import App

app = App()

app.add('a_valid_web_page_url', data_type='web_page')

```

# 🧾 XML file

To add any xml file, use the data\_type as `xml`. Eg:

```python

from embedchain import App

app = App()

app.add('content/data.xml', data_type='xml')

```

Note: Only the text content of the xml file will be added to the app. The tags will be ignored.

# 📽️ Youtube Channel

## Setup

Make sure you have all the required packages installed before using this data type. You can install them by running the following command in your terminal.

```bash

pip install -U "embedchain[youtube]"

```

## Usage

To add all the videos from a youtube channel to your app, use the data\_type as `youtube_channel`.

```python

from embedchain import App

app = App()

app.add("@channel_name", data_type="youtube_channel")

```

# 📺 Youtube Video

## Setup

Make sure you have all the required packages installed before using this data type. You can install them by running the following command in your terminal.

```bash

pip install -U "embedchain[youtube]"

```

## Usage

To add any youtube video to your app, use the data\_type as `youtube_video`. Eg:

```python

from embedchain import App

app = App()

app.add('a_valid_youtube_url_here', data_type='youtube_video')

```

# 🧩 Embedding models

## Overview

Embedchain supports several embedding models from the following providers:

## OpenAI

To use OpenAI embedding function, you have to set the `OPENAI_API_KEY` environment variable. You can obtain the OpenAI API key from the [OpenAI Platform](https://platform.openai.com/account/api-keys).

Once you have obtained the key, you can use it like this:

```python main.py

import os

from embedchain import App

os.environ['OPENAI_API_KEY'] = 'xxx'

# load embedding model configuration from config.yaml file

app = App.from_config(config_path="config.yaml")

app.add("https://en.wikipedia.org/wiki/OpenAI")

app.query("What is OpenAI?")

```

```yaml config.yaml

embedder:
  provider: openai
  config:
    model: 'text-embedding-3-small'

```

* OpenAI announced two new embedding models: `text-embedding-3-small` and `text-embedding-3-large`. Embedchain supports both these models. Below you can find YAML config for both:

```yaml text-embedding-3-small.yaml

embedder:
  provider: openai
  config:
    model: 'text-embedding-3-small'

```

```yaml text-embedding-3-large.yaml

embedder:
  provider: openai
  config:
    model: 'text-embedding-3-large'

```

## Google AI

To use the Google AI embedding function, set the `GOOGLE_API_KEY` environment variable. You can obtain the Google API key from [Google MakerSuite](https://makersuite.google.com/app/apikey).

```python main.py
import os
from embedchain import App

os.environ["GOOGLE_API_KEY"] = "xxx"

app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
embedder:
  provider: google
  config:
    model: 'models/embedding-001'
    task_type: "retrieval_document"
    title: "Embeddings for Embedchain"
```

For more details regarding the Google AI embedding model, please refer to the [Google AI documentation](https://ai.google.dev/tutorials/python_quickstart#use_embeddings).

## AWS Bedrock

To use the AWS Bedrock embedding function, set the AWS environment variables shown in the code block below.

```python main.py
import os
from embedchain import App

os.environ["AWS_ACCESS_KEY_ID"] = "xxx"
os.environ["AWS_SECRET_ACCESS_KEY"] = "xxx"
os.environ["AWS_REGION"] = "us-west-2"

app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
embedder:
  provider: aws_bedrock
  config:
    model: 'amazon.titan-embed-text-v2:0'
    vector_dimension: 1024
    task_type: "retrieval_document"
    title: "Embeddings for Embedchain"
```

For more details regarding the AWS Bedrock embedding model, please refer to the [AWS Bedrock documentation](https://docs.aws.amazon.com/bedrock/latest/userguide/titan-embedding-models.html).

## Azure OpenAI

To use an Azure OpenAI embedding model, set the Azure OpenAI environment variables shown in the code block below:

```python main.py
import os
from embedchain import App

os.environ["OPENAI_API_TYPE"] = "azure"
os.environ["AZURE_OPENAI_ENDPOINT"] = "https://xxx.openai.azure.com/"
os.environ["AZURE_OPENAI_API_KEY"] = "xxx"
os.environ["OPENAI_API_VERSION"] = "xxx"

app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
llm:
  provider: azure_openai
  config:
    model: gpt-35-turbo
    deployment_name: your_llm_deployment_name
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false

embedder:
  provider: azure_openai
  config:
    model: text-embedding-ada-002
    deployment_name: your_embedding_model_deployment_name
```

You can find the list of models and deployment names on the [Azure OpenAI Platform](https://oai.azure.com/portal).
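Because this setup depends on four environment variables, it can help to check them before creating the app. The following is a minimal sketch: `missing_env_vars` is a hypothetical helper, not part of Embedchain, and the variable names are taken from the example above.

```python
import os

# Variable names taken from the Azure OpenAI example above.
REQUIRED_AZURE_VARS = (
    "OPENAI_API_TYPE",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "OPENAI_API_VERSION",
)


def missing_env_vars(names, env=None):
    """Return the names that are unset or empty in the given environment mapping."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


missing = missing_env_vars(REQUIRED_AZURE_VARS)
if missing:
    print("Set these variables before creating the app:", ", ".join(missing))
```

The same helper can be reused for other providers by passing a different tuple of names, for example the three AWS variables used in the Bedrock section.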

## GPT4ALL

GPT4All supports generating high-quality embeddings of arbitrary-length text documents using a CPU-optimized, contrastively trained Sentence Transformer.

```python main.py
from embedchain import App

# load embedding model configuration from config.yaml file
app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
llm:
  provider: gpt4all
  config:
    model: 'orca-mini-3b-gguf2-q4_0.gguf'
    temperature: 0.5
    max_tokens: 1000
    top_p: 1
    stream: false

embedder:
  provider: gpt4all
```

## Hugging Face

Hugging Face supports generating embeddings of arbitrary-length text documents using the Sentence Transformers library. An example of how to generate embeddings with Hugging Face is given below:

```python main.py
from embedchain import App

# load embedding model configuration from config.yaml file
app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
llm:
  provider: huggingface
  config:
    model: 'google/flan-t5-xxl'
    temperature: 0.5
    max_tokens: 1000
    top_p: 0.5
    stream: false

embedder:
  provider: huggingface
  config:
    model: 'sentence-transformers/all-mpnet-base-v2'
    model_kwargs:
      trust_remote_code: True # Only use if you trust your embedder
```

## Vertex AI

Embedchain supports Google's Vertex AI embedding models through a simple interface. Pass the model name as `model` in the YAML config and it works out of the box.

```python main.py
from embedchain import App

# load embedding model configuration from config.yaml file
app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
llm:
  provider: vertexai
  config:
    model: 'chat-bison'
    temperature: 0.5
    top_p: 0.5

embedder:
  provider: vertexai
  config:
    model: 'textembedding-gecko'
```

## NVIDIA AI

[NVIDIA AI Foundation Endpoints](https://www.nvidia.com/en-us/ai-data-science/foundation-models/) let you quickly use NVIDIA's AI models, such as Mixtral 8x7B and Llama 2, through NVIDIA's API. These models are available in the [NVIDIA NGC catalog](https://catalog.ngc.nvidia.com/ai-foundation-models), fully optimized and ready to use on NVIDIA's AI platform. They are designed for high speed and easy customization, ensuring smooth performance on any accelerated setup.

### Usage

To use embedding models and LLMs from NVIDIA AI, create an account on the [NVIDIA NGC Service](https://catalog.ngc.nvidia.com/).

Generate an API key from the dashboard and set it as the `NVIDIA_API_KEY` environment variable. Note that the key starts with `nvapi-`.

Below is an example of how to use an LLM and an embedding model from NVIDIA AI:

```python main.py
import os
from embedchain import App

os.environ['NVIDIA_API_KEY'] = 'nvapi-xxxx'

config = {
    "app": {
        "config": {
            "id": "my-app",
        },
    },
    "llm": {
        "provider": "nvidia",
        "config": {
            "model": "nemotron_steerlm_8b",
        },
    },
    "embedder": {
        "provider": "nvidia",
        "config": {
            "model": "nvolveqa_40k",
            "vector_dimension": 1024,
        },
    },
}

app = App.from_config(config=config)

app.add("https://www.forbes.com/profile/elon-musk")
answer = app.query("What is the net worth of Elon Musk today?")
# Answer: The net worth of Elon Musk is subject to fluctuations based on the market value of his holdings in various companies.
# As of March 1, 2024, his net worth is estimated to be approximately $210 billion. However, this figure can change rapidly due to stock market fluctuations and other factors.
# Additionally, his net worth may include other assets such as real estate and art, which are not reflected in his stock portfolio.
```
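Since the key is expected to start with `nvapi-`, a quick check before building the app can catch a misconfigured key early. The following is a minimal sketch: `looks_like_nvidia_key` is a hypothetical helper, not part of Embedchain, and it only checks the prefix mentioned above, not whether the key is actually valid.

```python
import os


def looks_like_nvidia_key(key) -> bool:
    """Heuristic only: the key should be a string that starts with 'nvapi-'."""
    prefix = "nvapi-"
    return isinstance(key, str) and key.startswith(prefix) and len(key) > len(prefix)


# Read the key the same way the example above expects it to be provided.
key = os.environ.get("NVIDIA_API_KEY")
if not looks_like_nvidia_key(key):
    print("NVIDIA_API_KEY is missing or does not start with 'nvapi-'")
```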

## Cohere

To use embedding models and LLMs from Cohere, create an account on [Cohere](https://dashboard.cohere.com/welcome/login?redirect_uri=%2Fapi-keys).

Generate an API key from the dashboard and set it as the `COHERE_API_KEY` environment variable.

Once you have obtained the key, you can use it like this:

```python main.py
import os
from embedchain import App

os.environ['COHERE_API_KEY'] = 'xxx'

# load embedding model configuration from config.yaml file
app = App.from_config(config_path="config.yaml")
```

```yaml config.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-english-light-v3.0'
```

Cohere offers several embedding models: `embed-english-v3.0`, `embed-multilingual-v3.0`, `embed-multilingual-light-v3.0`, `embed-english-v2.0`, `embed-english-light-v2.0` and `embed-multilingual-v2.0`. Embedchain supports all of these models. The YAML config for each is shown below:

```yaml embed-english-v3.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-english-v3.0'
    vector_dimension: 1024
```

```yaml embed-multilingual-v3.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-multilingual-v3.0'
    vector_dimension: 1024
```

```yaml embed-multilingual-light-v3.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-multilingual-light-v3.0'
    vector_dimension: 384
```

```yaml embed-english-v2.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-english-v2.0'
    vector_dimension: 4096
```

```yaml embed-english-light-v2.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-english-light-v2.0'
    vector_dimension: 1024
```

```yaml embed-multilingual-v2.0.yaml
embedder:
  provider: cohere
  config:
    model: 'embed-multilingual-v2.0'
    vector_dimension: 768
```
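The per-model configs above differ only in the model name and `vector_dimension`, so they can be generated from a single lookup table. The following is a minimal sketch: `cohere_embedder_config` is a hypothetical helper, not part of Embedchain, and the dimensions are copied from the YAML snippets above rather than checked against the Cohere API.

```python
# Dimensions copied from the YAML snippets above (documentation values only).
COHERE_VECTOR_DIMENSIONS = {
    "embed-english-v3.0": 1024,
    "embed-multilingual-v3.0": 1024,
    "embed-multilingual-light-v3.0": 384,
    "embed-english-v2.0": 4096,
    "embed-english-light-v2.0": 1024,
    "embed-multilingual-v2.0": 768,
}


def cohere_embedder_config(model: str) -> dict:
    """Hypothetical helper: build the embedder section for a Cohere model."""
    config = {"model": model}
    dimension = COHERE_VECTOR_DIMENSIONS.get(model)
    if dimension is not None:
        # Only set vector_dimension for models listed in the table above.
        config["vector_dimension"] = dimension
    return {"embedder": {"provider": "cohere", "config": config}}


print(cohere_embedder_config("embed-multilingual-light-v3.0"))
```

The resulting dictionary can be passed to `App.from_config(config=...)`, in the same way as the dictionary in the NVIDIA AI example.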